HSBC and IBM have reported an improvement of up to 34% in fill-rate modelling for bond request-for-quote (RFQ) trading by combining quantum-generated features with common data-science algorithms.

The bank–technology partnership claims this is the world’s first public, industrial-scale, quantum-computing-enabled market-making model. According to the supporting research paper, using IBM’s Heron quantum computer improved the prediction of whether a dealer’s RFQ quote will be filled by up to 34%.

Philip Intallura, HSBC’s group head of quantum technologies, said: “This is a ground-breaking world-first in bond trading. It means we now have a tangible example of how today’s quantum computers could solve a real-world business problem at scale and offer a competitive edge, which will only continue to grow as quantum computers advance.”

The research tackles a common quant problem in market-making models: by estimating the probability that a quote will be filled, dealers can manage the inventory risk in their book more precisely by tuning how aggressively they supply liquidity.
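The trade-off can be illustrated with a toy model in Python. Everything in it is a hypothetical assumption for illustration, not HSBC's model: the logistic fill curve and its parameters `a` and `b`, the spread grid in basis points, and the single `inventory_penalty_bp` term standing in for inventory risk.

```python
import math


def fill_probability(spread_bp, a=2.0, b=0.8):
    """Hypothetical logistic fill curve: the wider the quote, the less likely a fill."""
    return 1.0 / (1.0 + math.exp(-(a - b * spread_bp)))


def expected_value(spread_bp, inventory_penalty_bp=0.0):
    """Expected P&L per RFQ (bp): fill probability x (spread earned - inventory cost).
    A positive penalty means the trade would add unwanted risk to the book."""
    return fill_probability(spread_bp) * (spread_bp - inventory_penalty_bp)


def best_spread(candidates, inventory_penalty_bp=0.0):
    """Pick the candidate spread with the highest expected value."""
    return max(candidates, key=lambda s: expected_value(s, inventory_penalty_bp))


grid = [0.25 * k for k in range(1, 41)]  # candidate spreads from 0.25 to 10 bp
tight = best_spread(grid, inventory_penalty_bp=-1.0)  # keen to offload a position
wide = best_spread(grid, inventory_penalty_bp=2.0)    # wary of adding inventory
```

A dealer keen to offload a position (negative penalty) ends up quoting tighter, while one wary of adding inventory (positive penalty) quotes wider. The more accurate the fill-probability estimate, the better this trade-off is priced.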

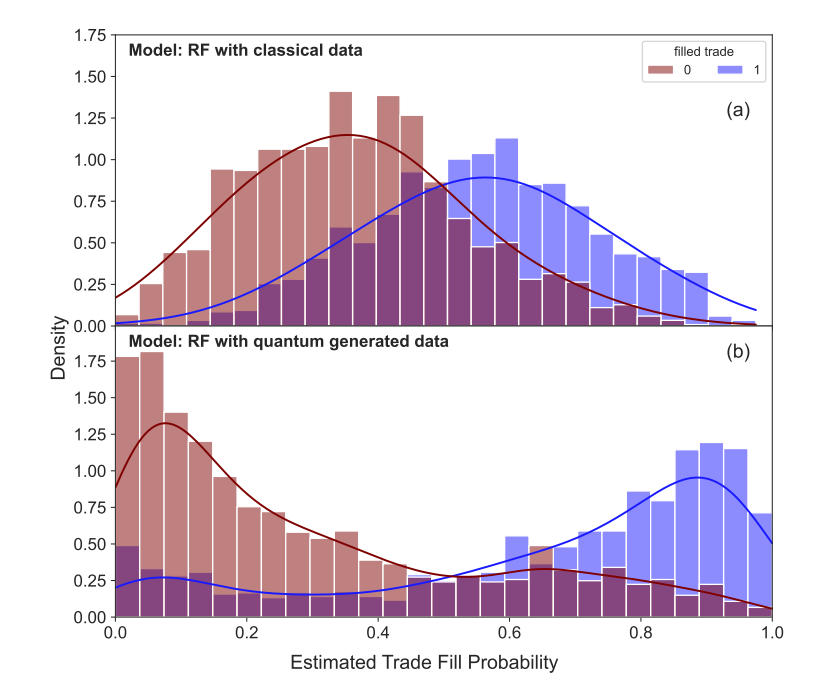

Rather than changing models, HSBC and IBM pre-process their historical data with a quantum circuit and feed the transformed features into standard data-science models: logistic regression, gradient boosting, random forests and neural networks. They compare performance by area under the curve in rolling, time-ordered backtests.
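The evaluation protocol can be sketched in plain Python. This is a hypothetical illustration, not the authors' code: it assumes fixed-length sliding windows of trading days (the article says only that backtests are rolling and time-ordered), and it computes AUC as the Mann-Whitney statistic, i.e. the probability that a randomly chosen filled RFQ is scored above a randomly chosen unfilled one.

```python
def rolling_splits(days, train_len, test_len):
    """Yield (train, test) windows of trading days; each test window lies strictly
    after its training window, so the model never sees the future."""
    start = 0
    while start + train_len + test_len <= len(days):
        split = start + train_len
        yield days[start:split], days[split:split + test_len]
        start += test_len  # slide forward by one test window


def roc_auc(labels, scores):
    """AUC as the probability that a filled RFQ (label 1) outranks an unfilled one
    (label 0); ties count as half. Quadratic in sample size, fine for a sketch."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs both filled and unfilled RFQs")
    wins = 0.0
    for p in pos:
        for q in neg:
            wins += 1.0 if p > q else 0.5 if p == q else 0.0
    return wins / (len(pos) * len(neg))
```

In each window one would fit a model on the training days, score the test days and record `roc_auc`; running the same loop once with raw features and once with quantum-generated features yields the per-window AUCs being compared.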

The dataset comprises 1.7 million RFQs over 294 trading days, from September 2023 to October 2024, mostly for European corporate bonds.

For their analysis, the team first encodes the market state at the time of each RFQ into a quantum circuit. Each request is represented by a 216-feature snapshot comprising trade attributes (from ticker to sector) and time-lagged data over short to long horizons that capture market dynamics and past buy- and sell-order behaviour.

They then run the circuit on IBM’s quantum computer, which applies a nonlinear transformation that yields 327 quantum features per RFQ.
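To make "quantum-generated features" concrete, here is a small noiseless statevector simulation of a generic feature-map circuit in Python with NumPy. It is a hypothetical illustration only: the encoding (one RY rotation per feature, applied before and after a ring of CZ gates), the measured observables (single-qubit Z and neighbouring ZZ expectations) and all function names are assumptions, not the circuit HSBC and IBM ran on Heron, and it uses a handful of qubits rather than a 216-feature input.

```python
import numpy as np


def ry(theta):
    """Single-qubit Y-rotation gate."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])


def apply_1q(state, gate, qubit, n):
    """Apply a 2x2 gate to one qubit of an n-qubit statevector."""
    psi = state.reshape([2] * n)
    psi = np.tensordot(gate, psi, axes=([1], [qubit]))  # gate output axis lands first
    return np.moveaxis(psi, 0, qubit).reshape(-1)


def apply_cz(state, q1, q2, n):
    """Controlled-Z: flip the sign of amplitudes where both qubits are |1>."""
    psi = state.reshape([2] * n).copy()
    idx = [slice(None)] * n
    idx[q1] = 1
    idx[q2] = 1
    psi[tuple(idx)] *= -1
    return psi.reshape(-1)


def z_observables(state, n):
    """Expectation values <Z_i> and <Z_i Z_{i+1}> (ring) from the statevector."""
    probs = np.abs(state.reshape([2] * n)) ** 2
    z = np.array([1.0, -1.0])  # eigenvalue of Z for bit 0 and bit 1
    singles, pairs = [], []
    for i in range(n):
        marg = probs.sum(axis=tuple(k for k in range(n) if k != i))
        singles.append(z @ marg)
        j = (i + 1) % n
        marg2 = probs.sum(axis=tuple(k for k in range(n) if k not in (i, j)))
        pairs.append(z @ marg2 @ z)
    return np.array(singles + pairs)


def quantum_features(x):
    """Hypothetical feature map: encode -> entangle -> re-encode -> measure."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    if n < 2:
        raise ValueError("need at least two features (one per qubit)")
    state = np.zeros(2 ** n)
    state[0] = 1.0  # start in |00...0>
    for i, theta in enumerate(x):
        state = apply_1q(state, ry(theta), i, n)
    for i in range(n):
        state = apply_cz(state, i, (i + 1) % n, n)
    for i, theta in enumerate(x):  # re-uploading the data adds nonlinearity
        state = apply_1q(state, ry(theta), i, n)
    return z_observables(state, n)
```

For example, `quantum_features([0.3, 1.2, 2.0, 0.7])` returns eight values in [-1, 1]. Scaling raw inputs to rotation angles is itself an assumption here. On real hardware these expectation values would be estimated from a finite number of shots and would carry device noise, which this simulation omits.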

Empirically, the quantum-generated features are smoother and closer to normally distributed than the classical features the models usually consume. The authors also report stronger results when the quantum computer’s natural hardware noise is left in rather than cleaned out, and suggest that this noise, combined with circuit depth, acts as a regulariser that amplifies weak signals while reducing overfitting.
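One simple way to check a claim such as "closer to a normal distribution" is to compare each feature's skewness and excess kurtosis, both of which are zero for a normal distribution. The article does not say which statistics the authors used, so this Python helper is an assumed, illustrative diagnostic rather than their method.

```python
def skew_and_excess_kurtosis(values):
    """Skewness and excess kurtosis from population moments.
    Both are 0 for a normal distribution."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    if m2 == 0:
        raise ValueError("constant feature: moments undefined")
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0
```

Applied column by column to raw and to quantum-generated features, values nearer zero would indicate more normal-looking inputs.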

Fed into classical data-science models, the quantum-enhanced inputs delivered the largest relative improvements in area under the curve (AUC) for fill-rate prediction, of up to roughly 34%, compared with both the plain classical data and the noise-reduced quantum simulation.

While the result is empirical rather than a formal proof of quantum advantage, the work is likely to entice traders hungry for a competitive edge.

©Markets Media Europe 2025